Since the surge of programs such as ChatGPT and DALL-E in 2022, AI has become an increasingly large part of people’s daily lives. More recently, corporations have started releasing AI products in order to capitalize on this emerging technology.

However, success in implementing AI technology varies, since the technology is still developing. According to MIT Sloan, “Content generated by AI tools like ChatGPT, Copilot, and Gemini have been found to provide users with fabricated data that appears authentic. These inaccuracies are so common that they’ve earned their own moniker; we refer to them as ‘hallucinations.’” Hallucinations occur for multiple reasons, including that generative AI is still developing and that it focuses on copying patterns rather than on accuracy.

Since AI has its flaws and inaccuracies, companies trying to push out a new program and jump on the trend are more likely to produce a flawed product.

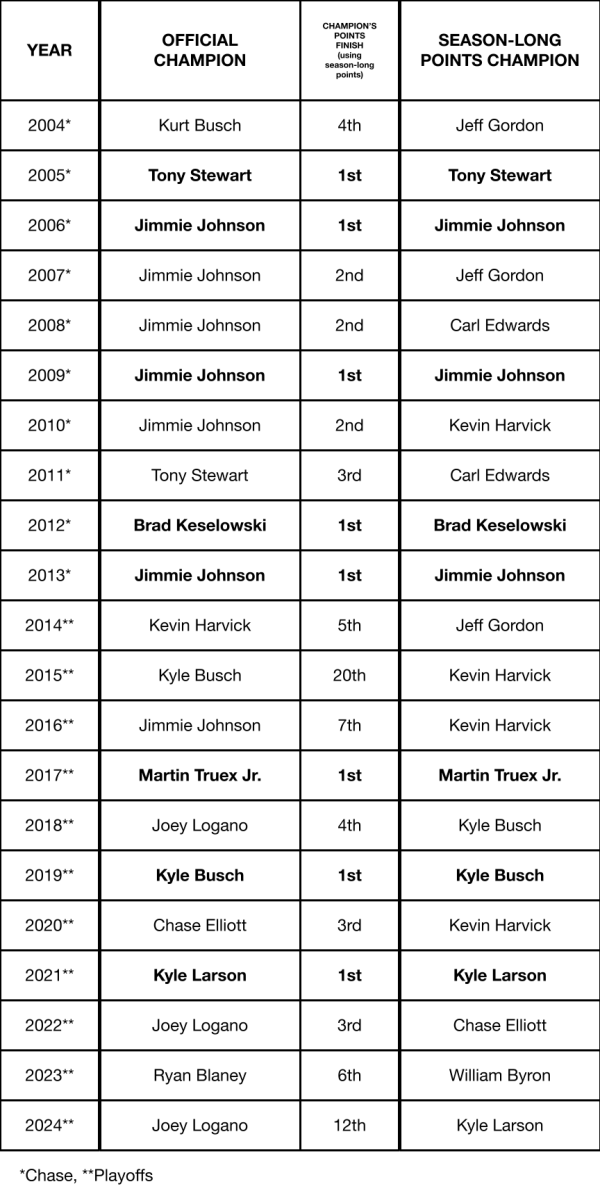

Google AI Overview changes the search

Over the summer of 2024, Google’s AI Overview feature was mocked by many internet users for inaccurate responses, such as recommending adding glue to tomato sauce when making pizza to help the cheese stick and “give it more tackiness,” and attributing health benefits to eating rocks on a daily basis. The former cited an 11-year-old Reddit comment, and the latter was sourced from a satirical article.

Liz Reid, the Head of Google Search, stated in a Google blog post that these responses appeared “generally for queries that people don’t commonly do.” This explanation is reasonable for the question “how many rocks should I eat,” but Reid did not address the bizarre response to the prompt “cheese not sticking to pizza,” which, considering how often the internet is used to find recipes and culinary advice, seems to be a relatively normal Google search.

Microsoft Recall AI follows suit

Around the same time, Microsoft announced the release of the feature “Recall AI.” In his article published by Information Age, the news outlet for the Australian Computer Society, Denham Sadler explains that its main purpose involves “constant taking of screenshots of the computer to help the user remember something they were doing at a certain time, sometimes months ago.”

Concerns arose because if someone were able to access the databases where these screenshots are stored, it would be a severe invasion of privacy. The frequent screen captures would essentially reveal all of the activity on the device and, even worse, could include bank information, passwords, or other sensitive data that someone may type into their computer.

Microsoft has since delayed the product’s debut, and in a more recent article, stated that Recall AI comes with a setting enabled that “helps filter out snapshots when potentially sensitive information is detected—for example, passwords, credit cards, and more.”

Both Microsoft and Google have generally fixed the major issues with their generative AI technologies. However, with generative AI in such an early stage of development, the technology can still carry certain risks.

Data scientists say to proceed with caution

Allison Shull, a data scientist at Stantec, describes using AI to help create proposals, which can run hundreds of pages long. Previous proposals are sent to the AI; however, this has to be done with caution.

“But what are they doing with the documents that you sent to them? Now that’s a risk, right? You can’t go send the department of defense bid to a random API online and let it turn into numbers and assume that your data is secure. I mean, you might have revealed essentially government secrets to some website,” Shull said.

Furthermore, since AI isn’t entirely centered on accuracy, its assistance works best when helping to construct a rough draft for these proposals, though it may require more attentive editing.

“The sort of risk associated with it is that you have to do your due diligence and review what is written. Because at the end of the day, the people whose names are on the bottom of the document who have signed off on it, they are liable and responsible for the content of that document,” Shull said.

Still, this emerging artificial intelligence has potential: it has helped the company develop a flood predictor that uses geographic information.

“It uses a machine learning model, which you could call AI, to process those features and predict, you know, this area is going to flood and this area is not going to flood depending on how much rainfall there is,” Shull said.

How AI is affecting education

With generative AI in the hands of essentially anybody, however, problems begin to arise, especially in a school setting.

At Parkway Central, artificial intelligence has not been involved in serious incidents. Chris Kaatman, an officer working at PCH, shared his view on how the technology should be used.

“I mean, I think how it should be used is probably just as another resource or source tool. I’m sure, I don’t know of any specific instances, but I can’t imagine that it hasn’t been used improperly by students to write a paper or do a project,” Kaatman said.

This use of generative AI to complete assignments primarily impacts educators. Libby Reed, an English teacher at Parkway Central, describes the issues that the technology has brought to English education.

“Well, it’s certainly been something kind of like when the internet started or when the internet became popular and everybody had it in schools and on their computers and everything, we were trying to make sure kids were still reading the books and not using SparkNotes or whatever. So it just adds another layer of complexity to making sure that what we’re seeing is really student work,” Reed said. “And I think that we do a pretty good job giving kids the opportunity to do their own work and to really show what they know how to do and not have to rely on the internet to do it for them.”

English mainly centers on written assignments, so it was the subject most impacted by the surge in generative AI. The technology has affected teaching as a whole, though. PCH Principal Tim McCarthy outlines the problems it has posed.

“AI is still very much an evolving technology. So I think part of what we’re still dealing with and navigating is that initial concern that is the student work or is the work that students are putting forward is that authentically their own,” McCarthy said.

Only two years have passed since generative AI became mainstream, so its capabilities in helping education may yet be uncovered.

“I think the next question is how can AI change what we do? How can it enhance what we do? And I think that’s still very much evolving,” McCarthy said.

Overall, the future of this kind of AI is still uncertain. In the past, new technologies such as radio and television were also received with concern. In the case of AI, though, its wide range of capabilities and its accessibility seem to create much more concerning problems in comparison.